import torch

import matplotlib.pyplot as plPyTorch and Autograd ✅

1 PyTorch

PyTorch is a drop-in replacement of NumPy with several key extensions.

PyTorch supports autograd – a feature of numerical libraries that automatically generates gradients using a technique known as backpropagation.

PyTorch supports hardware acceleration so that the matrix computations can be carried out efficiently on specialized hardwares such as Graphics Processing Units (GPU) or Tensor Processing Units (TPU) when they are available.

1.1 Drop-in replacement of NumPy

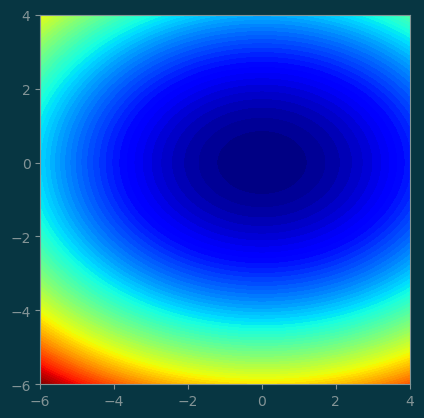

def f(x):

return x[0] ** 2 + 2 * x[1] ** 2xs = torch.linspace(-6, 4, 100)

ys = torch.linspace(-6, 4, 100)

xx, yy = torch.meshgrid(xs, ys, indexing=None)

coord = torch.concatenate([xx[:,:,None], yy[:,:,None]], axis=-1)

coord = coord.reshape(-1, 2).T

z = f(coord)

z = z.reshape(100,100)

pl.set_cmap(pl.get_cmap('jet'))

pl.contourf(xx, yy, z, levels=100)

ax = pl.gca()

ax.set_aspect('equal');

1.2 Converting torch.tensor to numpy

x = torch.tensor([1., 2.])

x.numpy()array([1., 2.], dtype=float32)y = f(x)

ytensor(9.)y.numpy()array(9., dtype=float32)y.item()9.02 Autograds

In PyTorch, tensors can be configured during creation to track any gradient with respect to itself.

Given something like:

x:requires_grad ---> u ---> v

f gIf x is created with requires_grad=True, then we can always collect the gradient of \(\nabla v\) or \(\nabla u\) with respect to x.

#

# collect the gradient

#

x = torch.tensor([-5., -2.], requires_grad=True)

y = f(x)

ytensor(33., grad_fn=<AddBackward0>)#

# all values derived from `x` can trigger .backward()

# to propogate the gradient to `x`.

#

y.backward()#

# the `y.backward()` backpropagates gradient vectors

# to all tensors with `requires_grad=True` that `y` depends on.

#

x.gradtensor([-10., -8.])#

# It's important to know that gradients are accumulated

# on the tensors.

#

z = f(x)

z.backward()x.gradtensor([-20., -16.])#

# we can zero the gradient manually

#

x.grad.zero_()tensor([0., 0.])x.gradtensor([0., 0.])3 PyTorch Computational Graph Management

A collection of computational graphs are maintained in order to manage the gradient propagation feature.

This is not only expensive but also causes issues when we are performing non-optimization related computations (such as incrementing counters).

PyTorch provides several features to manage the computational graph so we can:

- Temporarily disable autograd tracking

- Create copies of tensors that do not participate in any computational graph

3.1 A sign of trouble

x = torch.tensor([5.0, 2.0], requires_grad=True)#

# A gradient update

#

f(x).backward()

x.sub_(x.grad * 0.01)RuntimeError: a leaf Variable that requires grad is being used in an in-place operation.Remember that, by default, all computation involving x will require gradient tracking. Any in-place modifications of x will cause a recursive loop in the computational graph, and thus is not allowed during gradient tracking.

3.2 Disable gradient tracking in context

The solution is to create a context in which gradient tracking is disabled.

with torch.no_grad():

x.sub_(x.grad * 0.01)

xtensor([4.6000, 1.6800], requires_grad=True)3.3 Another sign of trouble

x = torch.tensor([-5., -2.], requires_grad=True)

y = f(x)

print("Output of f=", y.numpy())RuntimeError: Can't call numpy() on Tensor that requires grad. Use tensor.detach().numpy() instead.We cannot convert a tensor that is currently participating in gradient tracking.

3.4 Detached copy

The solution is to make a detached copy of the tensor.

A detached copy is a copy of the tensor that is not participating in any computational graph.

output = y.detach()

print("Output of f=", output.numpy())Output of f= 33.0